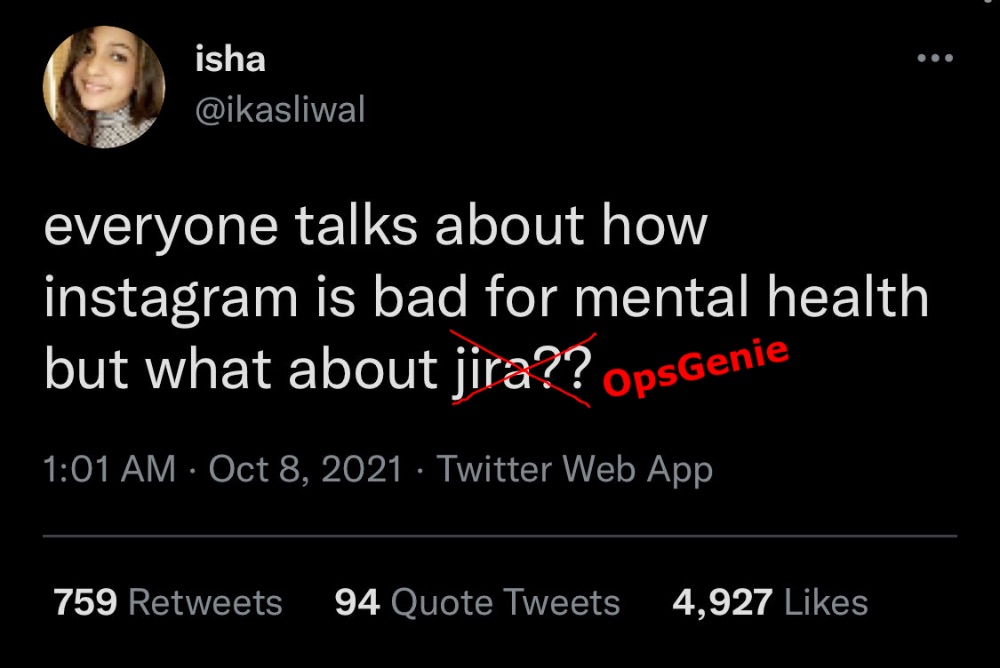

Today I started my 24/7 on-call shift at work. I'm responsible for our production code from Monday to Monday, which means that at any time between this Monday and next Monday, Opsgenie can summon me when an incident happens at work.

Today, I had a total of 4 alerts.

I started my shift at 10:00am, and at 10:05am I was greeted by my first critical. It was an alert that all the pods for a particular service were in a terminating state.

For the past 2 weeks, I've been working on my Kubernetes skills, and that definitely came in handy. I was able to check the deployments and the pods in question, restart the terminating pods to see if that helped, and dig through our various logging and monitoring platforms like Grafana and Graylog.

Within the space of an hour, 2 criticals had gone off. Thankfully, it was during work hours, so I was able to loop in the team in question.

I had a relatively quiet day after the 2 criticals in the morning.

The 3rd critical came in at 8:02pm, just as I had finished eating pounded yam and egusi soup. It was the same issue as the first two criticals earlier in the day: there was a delay in syncing the pod state, so the pods were showing as terminating when there were actually separate pods alive.

I now see why the voucher squad's must-win for Q4 is reducing their alert noise, because it's quite crazy.

The 4th critical came in at 9:09pm. Kinda similar to the 3rd, but easier to mitigate: restarting the errored pod did the trick.

And the Opsgenie alert sound is unmistakable. It sounds like an alarm at an army barracks (trust me, I would know).

I hope to sleep through the night with no Opsgenie alerts. Amen.